Introduction

We provide RGB-D video and reconstructed 3D models for a number of scenes. The data may be used for any purpose with proper attribution; if you simply need a 3D scene, feel free to use these models. If you use any of the data, please cite our paper:

More generally, if you do something interesting with the data, we would be happy to hear about it. Contact us at: Qianyi Zhou (Qianyi.Zhou@gmail.com) and Vladlen Koltun (vkoltun@gmail.com)

Data

The data was originally hosted on a server at Stanford University, which is why it was known at the time as "Stanford 3D Scene Data". Since I left Stanford, it has been moved to a Google Drive folder.

In our latest project, "Robust Reconstruction of Indoor Scenes", we have also published a synthetic RGB-D dataset (thanks to my friend Sungjoon Choi) and models reconstructed from a set of SUN3D scans. In addition, we have collected 10,000 dedicated 3D scans and reconstructed 398 mesh models.

Augmented ICL-NUIM Dataset, SUN3D Scenes, A Large Dataset of Object Scans

Format

Most scenes include a reconstructed model in .ply format; an RGB-D video, provided either as an .oni file (requires OpenNI) or as a zip file of individual color and depth images (both in .png format); and an estimated camera trajectory in a customized .log format (explained later).
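The exact .log layout is documented on a separate page. The reader below is only a sketch that assumes each entry is a metadata line of three integers followed by the four rows of the 4x4 transformation matrix Tk. Please check that assumption against the format description before relying on it. The names LogEntry, readLog, readLogFile and readLogString are hypothetical. Matrices are kept row-major in a plain std::array so the sketch has no Eigen dependency.

```cpp
#include <array>
#include <fstream>
#include <istream>
#include <sstream>
#include <string>
#include <vector>

// One trajectory entry. ASSUMPTION: a metadata line of three integers,
// followed by the 4x4 matrix Tk written row by row.
struct LogEntry {
    std::array<int, 3> meta;
    std::array<double, 16> T;  // row-major: T[r * 4 + c] is row r, column c
};

// Reads entries until the stream ends; a truncated final entry is dropped.
std::vector<LogEntry> readLog(std::istream& in) {
    std::vector<LogEntry> entries;
    LogEntry e{};
    while (in >> e.meta[0] >> e.meta[1] >> e.meta[2]) {
        for (double& value : e.T) {
            if (!(in >> value)) return entries;  // incomplete matrix: stop here
        }
        entries.push_back(e);
    }
    return entries;
}

// Reads a .log file from disk; returns an empty vector if it cannot be opened.
std::vector<LogEntry> readLogFile(const std::string& path) {
    std::ifstream file(path);
    return readLog(file);
}

// Parses .log content that is already in memory.
std::vector<LogEntry> readLogString(const std::string& text) {
    std::istringstream stream(text);
    return readLog(stream);
}
```

For example, `readLogFile("trajectory.log")[k].T[r * 4 + c]` gives row r, column c of the k-th matrix. Copying the 16 values row by row into an `Eigen::Matrix4d` yields the Tk used in the conversion code.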

Each RGB-D video is a continuous shot taken with an Asus Xtion Pro Live camera at VGA resolution (640x480) and full frame rate (30 Hz). Except for "fountain" and "readingroom", the depth data is calibrated using the technique presented in "Unsupervised Intrinsic Calibration of Depth Sensors via SLAM", by Alex Teichman, Stephen Miller, and Sebastian Thrun, RSS 2013. The calibration partially removes low-frequency distortion from the depth data. This calibration step was crucial in our SIGGRAPH 2013 paper. In our later papers, we developed an auto-calibration approach that no longer requires explicit calibration.

The estimated camera trajectory is stored in a .log file. See this page for details. The following C++ code first converts a depth pixel (u,v,d) into a point (x,y,z) in the camera's local coordinates, then transforms it into world coordinates (xw,yw,zw). We assume that the transformation matrix Tk has been extracted from the .log file and that the Eigen library is used. Raw depth values d are in millimeters; all other lengths are measured in meters.

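```cpp
#include <Eigen/Dense>

// Inputs: pixel coordinates (u, v) and raw depth value d (in millimeters).
const double fx = 525.0, fy = 525.0;  // default focal length
const double cx = 319.5, cy = 239.5;  // default optical center
Eigen::Matrix4d Tk;                   // k-th transformation matrix from trajectory.log

// translation from depth pixel (u,v,d) to a point (x,y,z)
const double z = d / 1000.0;          // millimeters -> meters
const double x = (u - cx) * z / fx;
const double y = (v - cy) * z / fy;

// transform (x,y,z) to (xw,yw,zw)
const Eigen::Vector4d w = Tk * Eigen::Vector4d(x, y, z, 1.0);
const double xw = w(0), yw = w(1), zw = w(2);
```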
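To convert whole frames without Eigen, the same per-pixel conversion can be sketched in plain C++. This is a minimal sketch with two assumptions. First, Tk is held as a row-major 16-element array, which is a hypothetical representation. Second, a depth value of 0 marks a pixel with no measurement. The names Intrinsics, pixelToWorld and depthImageToWorld are illustrative only. The first three components of Tk * (x, y, z, 1) depend only on the first three rows of Tk, so those are all the sketch uses.

```cpp
#include <array>
#include <cstddef>
#include <cstdint>
#include <vector>

// Pinhole intrinsics; defaults are the values quoted above for 640x480 frames.
struct Intrinsics {
    double fx = 525.0, fy = 525.0;
    double cx = 319.5, cy = 239.5;
};

using Pose = std::array<double, 16>;  // ASSUMPTION: Tk stored row-major
using Point3 = std::array<double, 3>;

// Back-projects pixel (u, v) with raw depth d (millimeters) and maps it to world coordinates.
Point3 pixelToWorld(double u, double v, double d, const Intrinsics& K, const Pose& T) {
    const double z = d / 1000.0;  // millimeters -> meters
    const double x = (u - K.cx) * z / K.fx;
    const double y = (v - K.cy) * z / K.fy;
    Point3 w{};
    for (int r = 0; r < 3; ++r)  // rows 0..2 of Tk * (x, y, z, 1)
        w[r] = T[r * 4 + 0] * x + T[r * 4 + 1] * y + T[r * 4 + 2] * z + T[r * 4 + 3];
    return w;
}

// Converts a row-major depth image (width x height) to world-space points.
// ASSUMPTION: depth 0 means "no measurement" and the pixel is skipped.
std::vector<Point3> depthImageToWorld(const std::vector<std::uint16_t>& depth,
                                      int width, int height,
                                      const Intrinsics& K, const Pose& T) {
    std::vector<Point3> points;
    for (int v = 0; v < height; ++v)
        for (int u = 0; u < width; ++u) {
            const std::uint16_t d = depth[static_cast<std::size_t>(v) * width + u];
            if (d == 0) continue;
            points.push_back(pixelToWorld(u, v, d, K, T));
        }
    return points;
}
```

Decoding the 16-bit depth PNGs into the `depth` vector is left to whichever image library you use. With the identity pose, the image center at 2000 mm maps to (0, 0, 2) meters.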

Preview

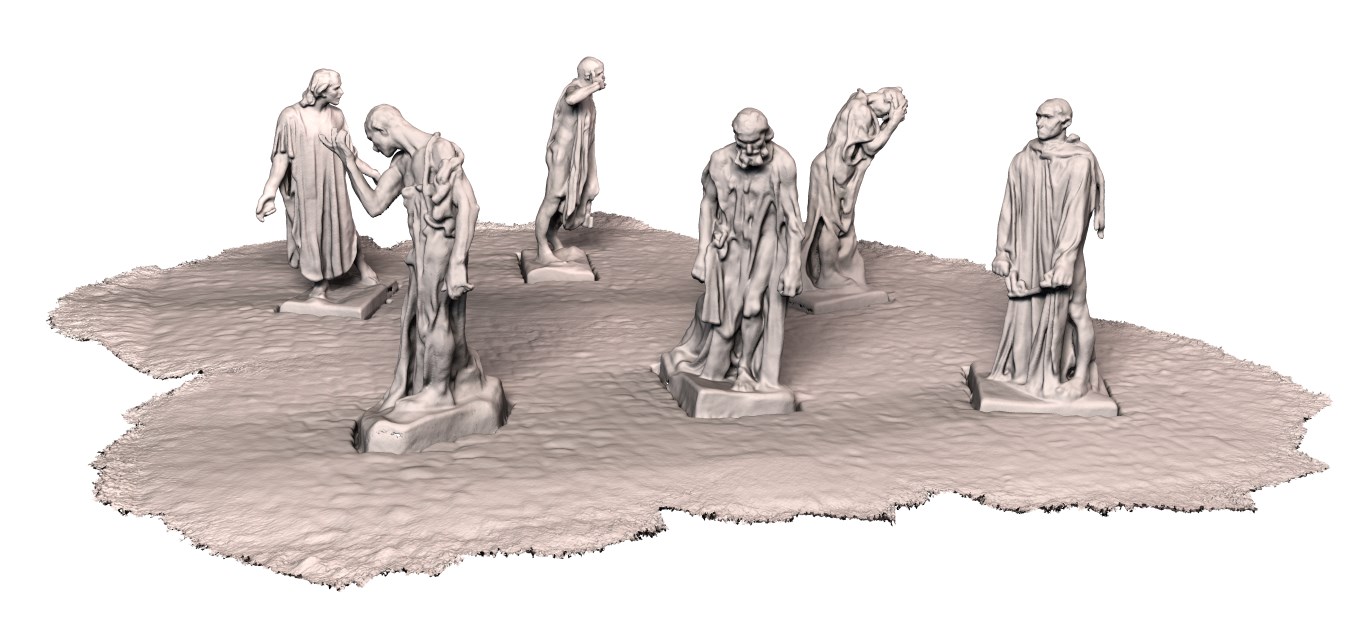

The Burghers of Calais

RGB video, Depth video, Model

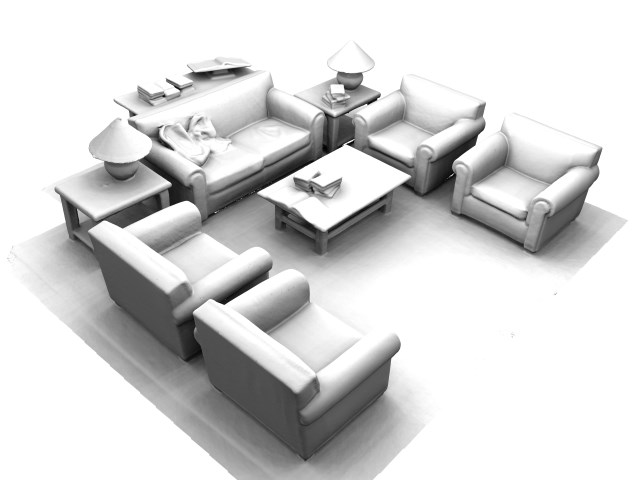

Lounge

RGB video, Depth video

Copy room

RGB video, Depth video

Cactus garden

RGB video, Depth video

Stone wall

RGB video, Depth video

Totem pole

RGB video, Depth video

Reading Room

RGB-D video, Model

Fountain

RGB-D video, Model